Language model, N-gram, Natural language processing, Speech recognition, Information retrieval, Computer vision

GPT-3 Language Model: A Technical Overview

RT @LambdaAPI: "A single training cycle for the 175 Billion parameter model takes about 355 years on a single V100 GPU, or around $4.6M using on-demand Lambda Cloud GPU instances."

https://t.co/m9oRpDzXFT
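The two figures in the quote can be cross-checked with a little arithmetic. The sketch below is only a back-of-the-envelope reading of the quoted numbers: the hourly rate is what those numbers imply, not a price stated in the source, and the `wall_clock_days` helper is a hypothetical illustration that assumes perfect linear scaling across GPUs, which real training runs do not achieve.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years

gpu_years = 355            # single-V100 training time quoted in the tweet
total_cost_usd = 4.6e6     # on-demand cost quoted in the tweet

# Convert GPU-years to GPU-hours, then derive the hourly rate the quote implies.
gpu_hours = gpu_years * HOURS_PER_YEAR
implied_rate_usd_per_hour = total_cost_usd / gpu_hours


def wall_clock_days(num_gpus: int) -> float:
    """Idealized wall-clock days on num_gpus V100s.

    Assumes perfect linear scaling (an assumption for illustration only).
    """
    return gpu_years * 365 / num_gpus


print(f"GPU-hours: {gpu_hours:,}")
print(f"Implied rate: ${implied_rate_usd_per_hour:.2f} per GPU-hour")
print(f"Idealized days on 1024 GPUs: {wall_clock_days(1024):.1f}")
```

Under these assumptions, the quoted cost works out to roughly a dollar and a half per GPU-hour, and the 355 GPU-years would take months of wall-clock time even on a cluster of about a thousand GPUs.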

Chuan Li, PhD reviews GPT-3, the new NLP model from OpenAI. This paper empirically shows that language model performance scales as a power law with model size, dataset size, and the amount of computation.
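A power-law relationship of the form y = a * x^b appears as a straight line on a log-log plot, so its exponent can be estimated with ordinary least squares on the logarithms. The sketch below illustrates that idea only. The data points, the coefficient 10.0, and the exponent -0.08 are made up for the example and are not values from the GPT-3 paper, and `fit_power_law` is a hypothetical helper name.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**b by linear regression on (log x, log y)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    # Slope of the log-log line is the power-law exponent b.
    sxx = sum((u - mean_x) ** 2 for u in log_x)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y))
    b = sxy / sxx
    # Intercept of the log-log line is log(a).
    a = math.exp(mean_y - b * mean_x)
    return a, b


# Synthetic, noise-free data (illustrative values, not measurements).
model_sizes = [1e6, 1e7, 1e8, 1e9]
true_a, true_b = 10.0, -0.08
losses = [true_a * n ** true_b for n in model_sizes]

a, b = fit_power_law(model_sizes, losses)
print(f"fitted a = {a:.4f}, fitted exponent b = {b:.4f}")
```

Because the synthetic data is generated from an exact power law, the fit recovers the chosen coefficient and exponent. With real measurements the points scatter around the line, and the fitted exponent summarizes how quickly the loss falls as the model grows.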



Novel Methods For Text Generation Using Adversarial Learning & Autoencoders

Just two years ago, text generation models were so unreliable that you needed to generate hundreds of samples in hopes of finding even one plausible sentence. Nowadays, OpenAI’s pre-trained ...

NLP Research Highlights — Issue #1

Introducing a new dedicated series to highlight the latest interesting natural language processing (NLP) research.


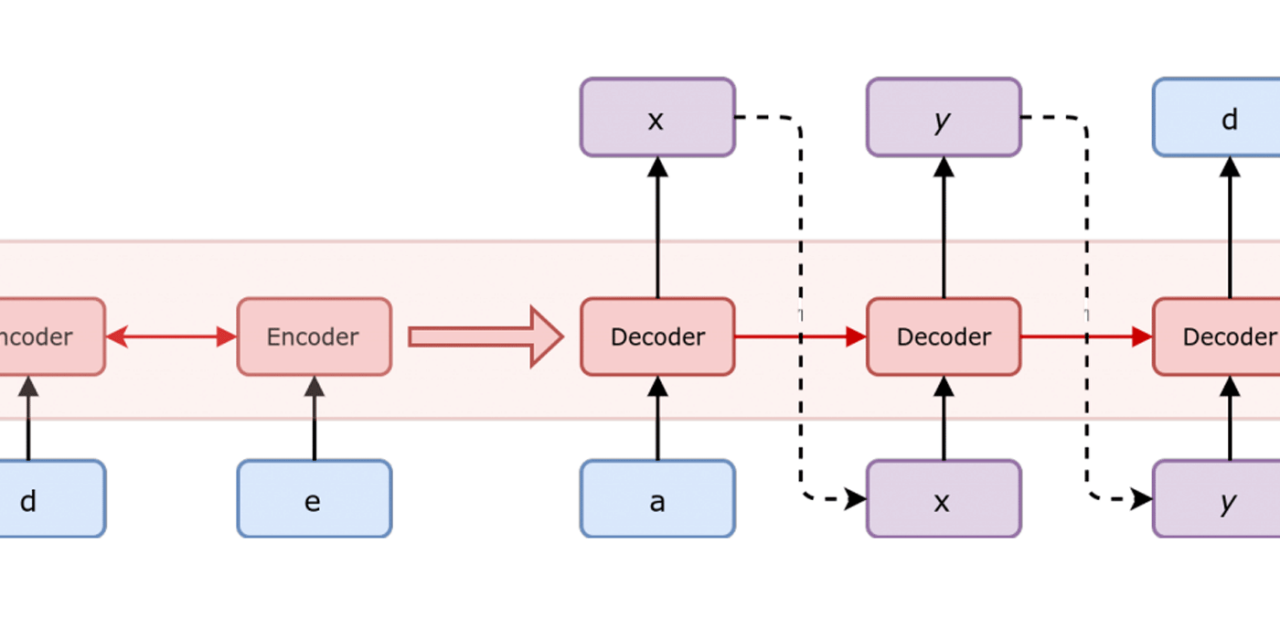

To enable our model to generalize to new classification tasks, we provide the model with a multiple choice question description containing each class in natural language, and train it to ...

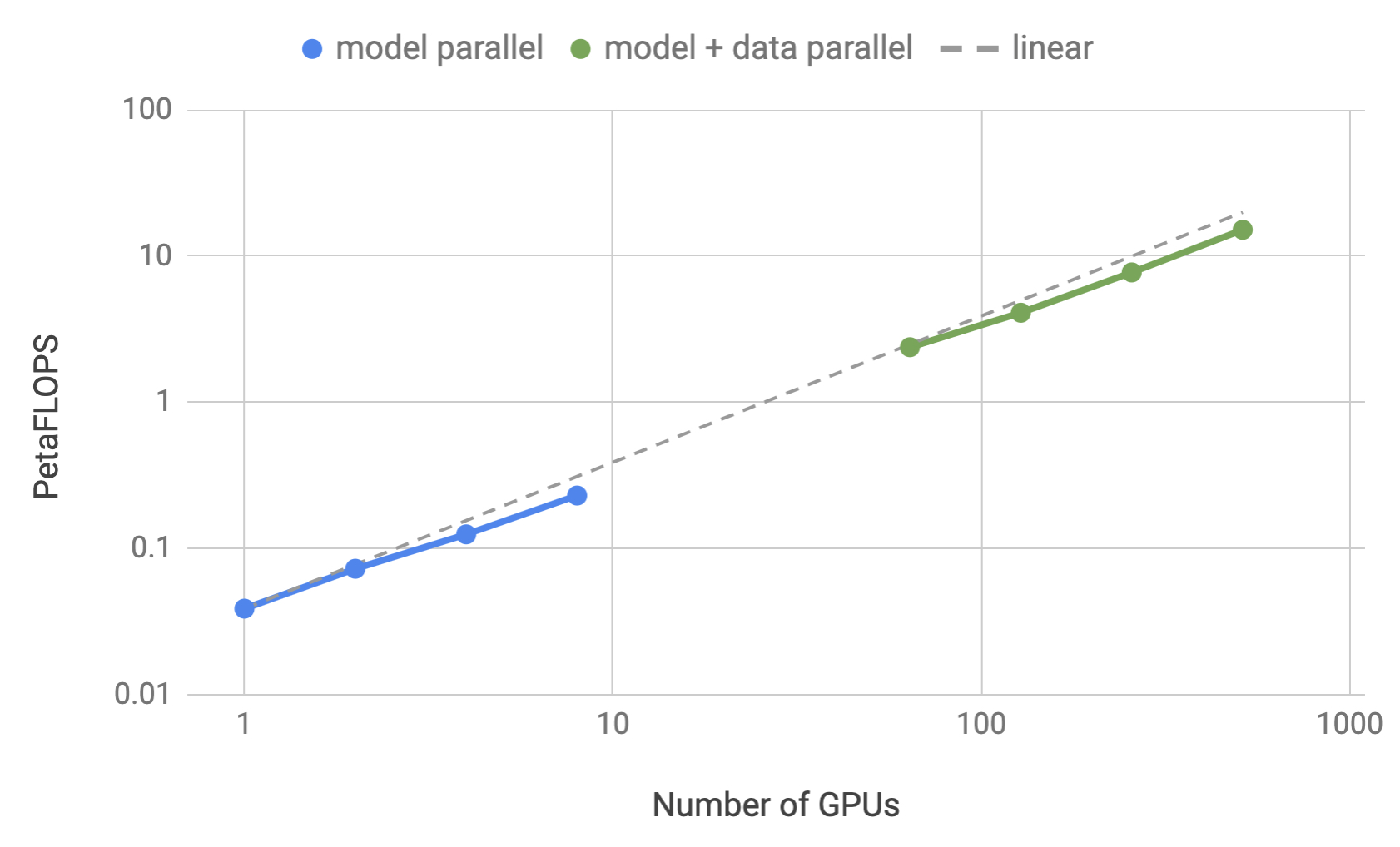

MegatronLM: Training Billion+ Parameter Language Models Using GPU Model Parallelism

We train an 8.3 billion parameter transformer language model with 8-way model parallelism and 64-way data parallelism on 512 GPUs, making it the largest transformer based language model ...

The Illustrated GPT-2 (Visualizing Transformer Language Models)

Discussions: Hacker News (64 points, 3 comments), Reddit r/MachineLearning (219 points, 18 comments). This year, we saw a dazzling application of machine learning. The OpenAI ...

AI, Analytics, Machine Learning, Data Science, Deep Learning Research Main Developments in 2019 and Key Trends for 2020

As we say goodbye to one year and look forward to another, KDnuggets has once again solicited opinions from numerous research & technology experts as to the most important developments of ...

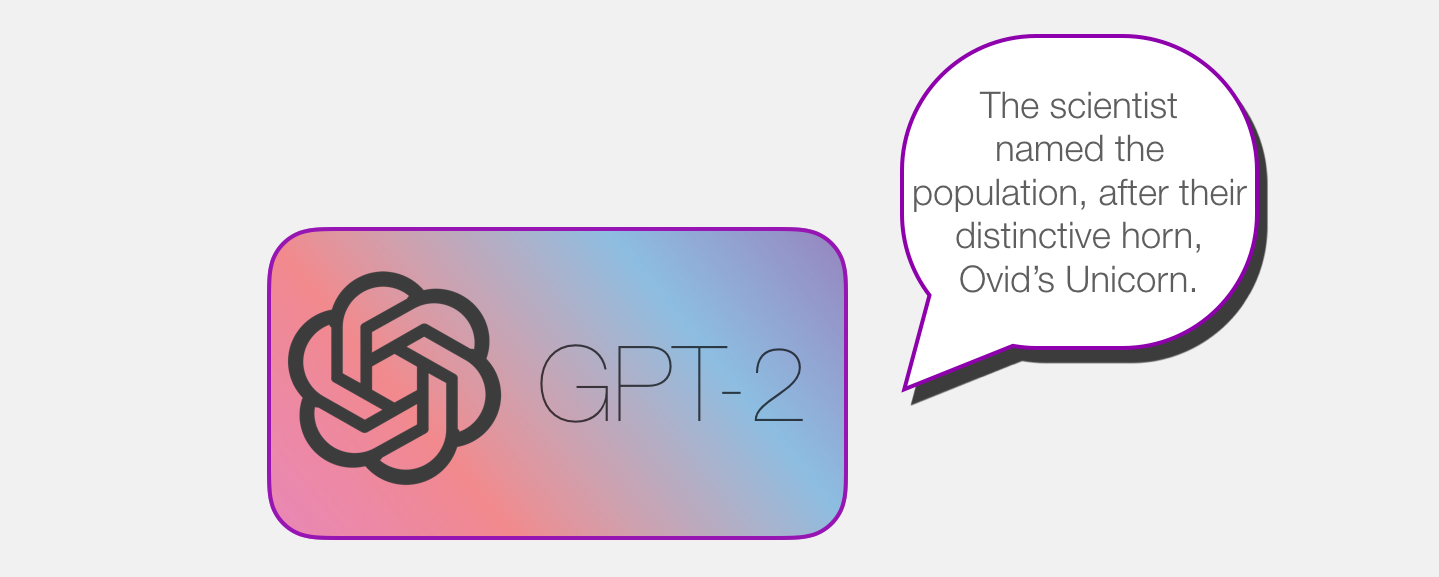

Language Models are Unsupervised Multitask Learners

Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever. Abstract: Natural language processing ...

Introducing spaCy v2.1

Version 2.1 of the spaCy Natural Language Processing library features a huge number of features, improvements and bug fixes. In this post, we highlight some of the features we're especially ...

Better Language Models and Their Implications

We’ve trained a large-scale unsupervised language model which generates coherent paragraphs of text, achieves state-of-the-art performance on many language modeling benchmarks, and performs ...

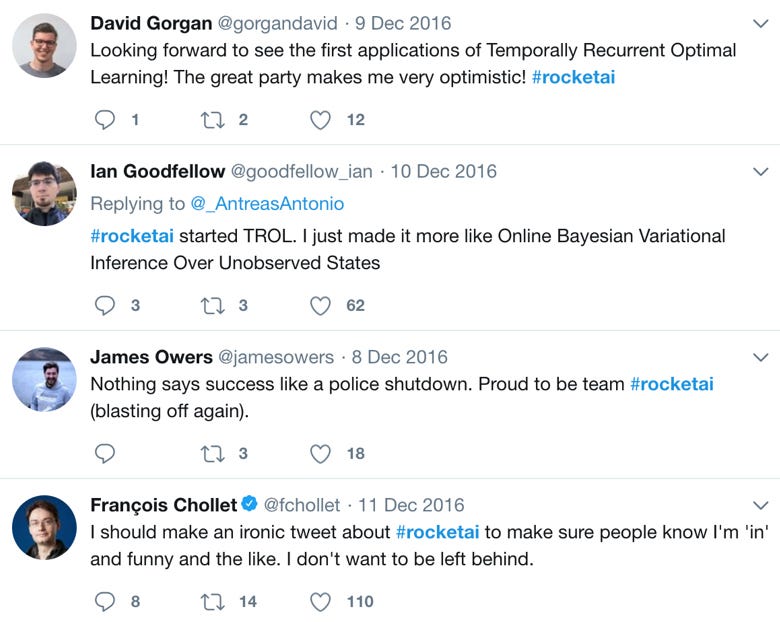

Staying Sane and Optimistic amid the AI hype

At NIPS 2016, there was an unprecedented story building up. Something that got every AI enthusiast agog about an unknown AI startup ‘Rocket AI’. The names asso…

The major advancements in Deep Learning in 2018

Deep Learning has changed the entire landscape over the past few years and its results are steadily improving. This article presents some of the main advances and accomplishments in Deep ...

OpenAI's Controversial New Language Model

In the inaugural TWiML Live, Sam Charrington is joined by Amanda Askell (OpenAI), Anima Anandkumar (NVIDIA/Caltech), Miles Brundage (OpenAI), Robert Munro (Li...