Top news of the week: 06.07.2022.

The Stanford Natural Language Processing Group

Towards Building Faithful Conversational Models. Nouha Dziri, University of Alberta/Amii. Venue: Zoom (link hidden). Abstract: Conversational models powered by large pre-trained …

Beyond neural scaling laws: beating power law scaling via data pruning

Widely observed neural scaling laws, in which error falls off as a power of the training set size, model size, or both, have driven substantial performance improvements in deep learning. ...
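To make "error falls off as a power of the training set size" concrete: a power law $E(N) = a \cdot N^{-\alpha}$ becomes a straight line in log-log space, so $\alpha$ can be estimated with ordinary least squares on $(\log N, \log E)$. The snippet below is a minimal sketch under that assumption. The helper name `fit_power_law`, the constants `a = 2.0` and `alpha = 0.3`, and the noise-free data are all hypothetical illustrations, not values from the paper.

```python
import math


def fit_power_law(sizes, errors):
    """Fit error ~= a * size**(-alpha) by least squares on log-log data.

    Hypothetical helper for illustration. Assumes every size and error
    is strictly positive. Returns the pair (a, alpha).
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(e) for e in errors]
    count = len(xs)
    mean_x = sum(xs) / count
    mean_y = sum(ys) / count
    # Slope of the least-squares line log(E) = log(a) - alpha * log(N)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Hypothetical, noise-free data generated from error = 2.0 * N**(-0.3)
sizes = [1_000, 10_000, 100_000, 1_000_000]
errors = [2.0 * n ** -0.3 for n in sizes]
a, alpha = fit_power_law(sizes, errors)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")  # prints: a = 2.000, alpha = 0.300
```

With this hypothetical exponent, every 10x increase in data multiplies the error by only $10^{-0.3} \approx 0.50$. That slow return on extra data is the power-law regime the paper proposes to beat through data pruning.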

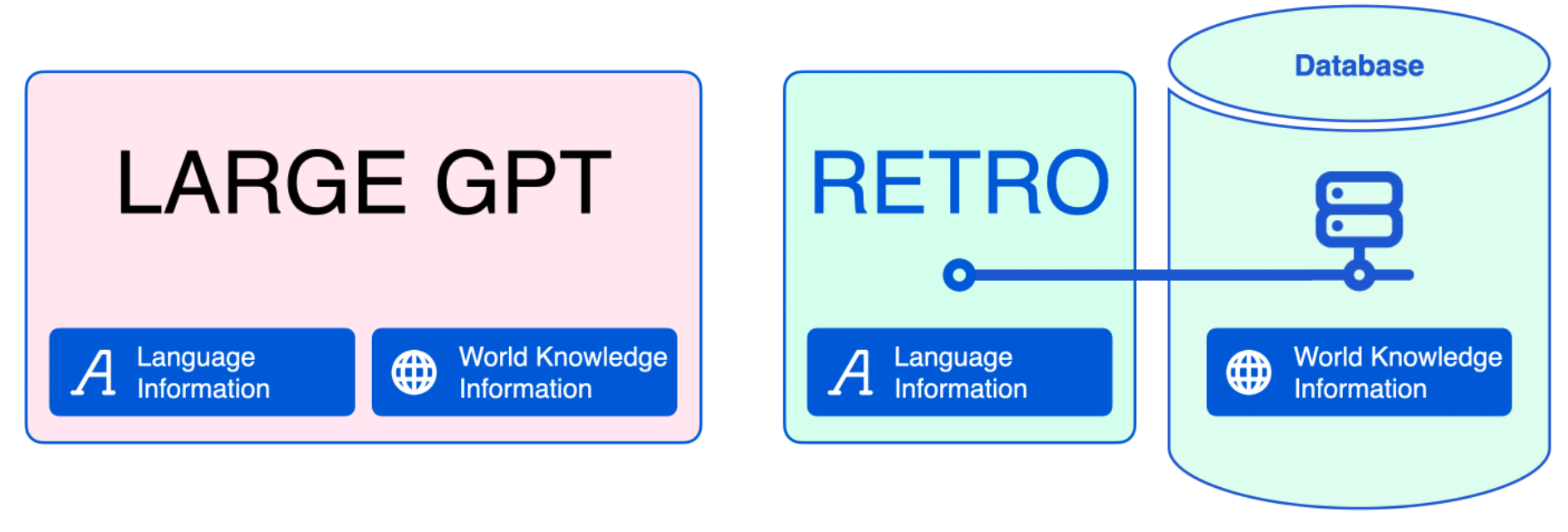

RETRO Is Blazingly Fast

When I first read Google’s RETRO paper, I was skeptical. Sure, RETRO models are 25x smaller than the competition, supposedly leading to HUGE savings in training and inference costs. But ...

[D] Industrial applications of causal representation learning


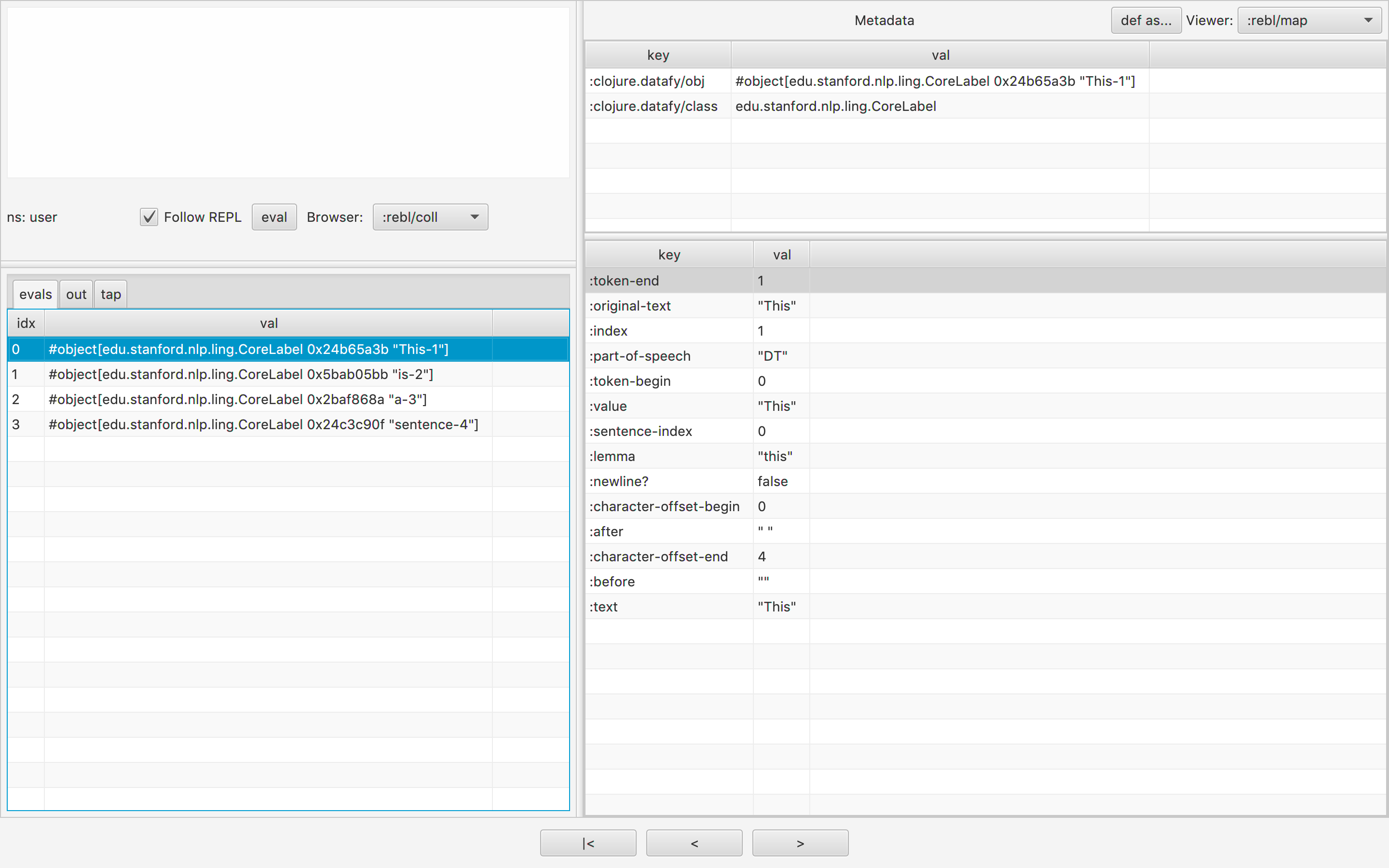

simongray/datalinguist

Stanford CoreNLP in idiomatic Clojure.

DALL·E Mega Model Card


Learning Controllable 3D Level Generators

Procedural Content Generation via Reinforcement Learning (PCGRL) forgoes the need for large human-authored datasets and allows agents to train explicitly on functional constraints, using ...
