CUDA, Nvidia, GPGPU, Graphics processing unit, Cloud computing, Neural network

Simplifying and Accelerating Machine Learning Predictions in Apache Beam with NVIDIA TensorRT

Loading and preprocessing data for running machine learning models at scale often requires seamlessly stitching the data processing framework and inference engine together. In this post…

Building a Question and Answering Service Using Natural Language Processing with NVIDIA NGC and Google Cloud

NVIDIA GTC provides training, insights, and direct access to experts. Join us for breakthroughs in AI, data center, accelerated computing, healthcare, game development, networking, and more.

NVIDIA Brings Affordable GPU to the Edge with Jetson Nano

Jetson Nano is the latest addition to NVIDIA’s Jetson portfolio. The $99 developer kit powered by the Jetson Nano module packs a lot of punch.

TensorFlow, PyTorch or MXNet? A comprehensive evaluation on NLP & CV tasks with Titan RTX

Thanks to the CUDA architecture [1] developed by NVIDIA, developers can exploit GPUs’ parallel computing power to perform general computation without extra effort. Our objective is to ...

High performance inference with TensorRT Integration

Posted by: Pooya Davoodi (NVIDIA), Guangda Lai (Google), Trevor Morris (NVIDIA), Siddharth Sharma (NVIDIA)

Accelerating Deep Learning on the JVM with Apache Spark and NVIDIA GPUs

In this article, the authors discuss how to combine Deep Java Library (DJL), Apache Spark v3, and NVIDIA GPU computing to simplify deep learning pipelines while improving ...

Deploy RAPIDS on GPU-Enabled Virtual Servers on IBM Cloud Virtual Private Cloud

Learn how to set up a GPU-enabled virtual server instance (VSI) on a Virtual Private Cloud (VPC) and deploy RAPIDS using IBM Schematics.

How to Use NVIDIA GPU Accelerated Libraries

If you are wondering how you can take advantage of NVIDIA GPU accelerated libraries for your AI projects, this guide will help answer questions and get you started on the right path.

Dynamic Neural Networks: Tape-Based Autograd

Tensors and Dynamic neural networks in Python with strong GPU acceleration - pytorch/pytorch

Google Cloud launches Deep Learning Containers in beta

Google Cloud Platform's Deep Learning Containers offer environments for virtual machines to test or deploy machine learning-fueled apps or services.

Deepset achieves a 3.9x speedup and 12.8x cost reduction for training NLP models by working with AWS and NVIDIA

This is a guest post from deepset (creators of the open source frameworks FARM and Haystack), with contributions from authors at NVIDIA and AWS. At deepset, we’re building the ...

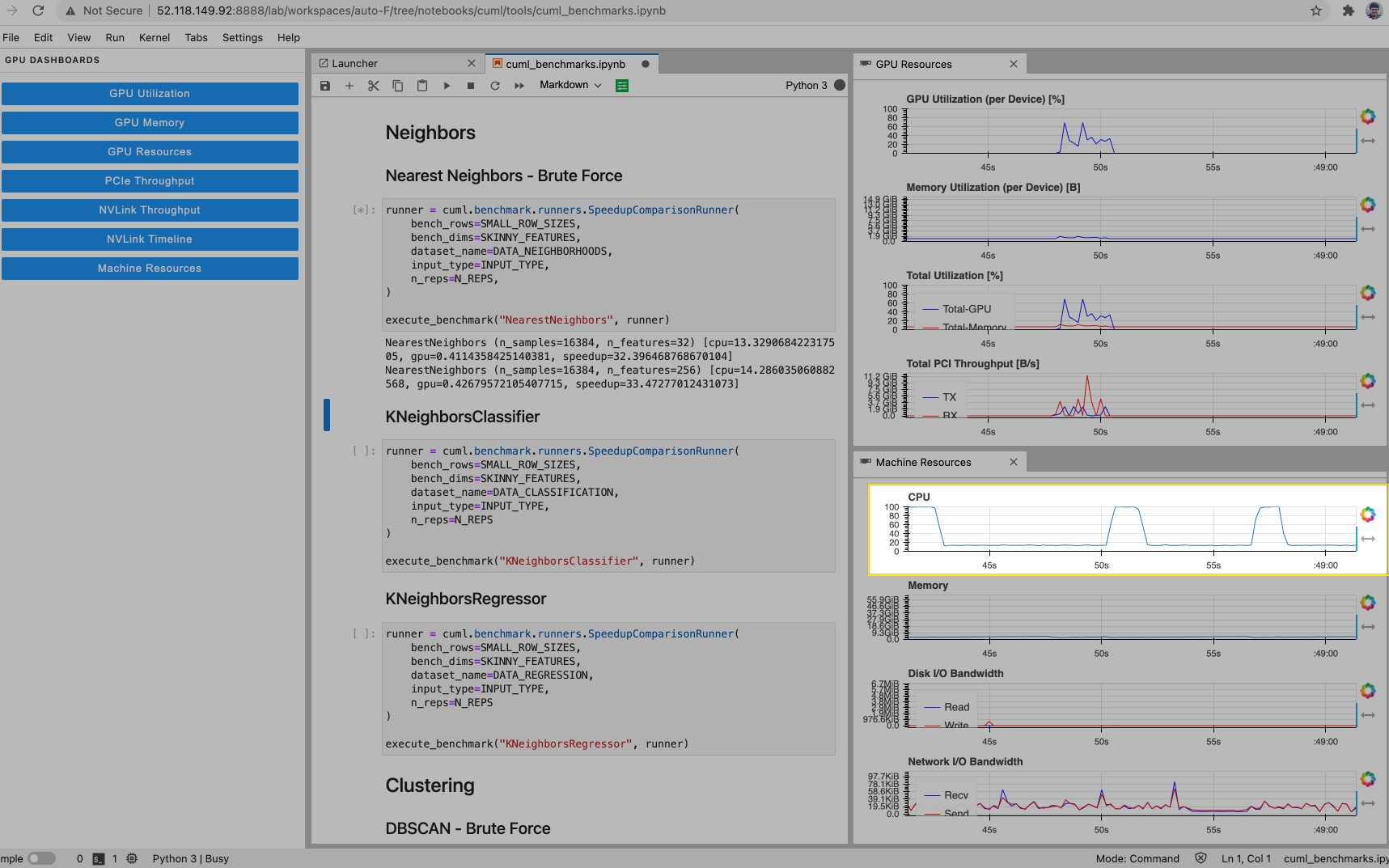

Scale model training in minutes with RAPIDS + Dask and NVIDIA GPUs on AI Platform

Scale model training from 10 GB to 640 GB in minutes with RAPIDS + Dask and NVIDIA GPUs on AI Platform

Nvidia RAPIDS accelerates analytics and machine learning

New open source libraries from Nvidia provide GPU acceleration of data analytics and machine learning. The company claims 50x speedups over CPU-only implementations.

Microsoft powers transformation at NVIDIA’s GTC Digital Conference

The world of supercomputing is evolving. Work once limited to on-premises high-performance computing (HPC) clusters and traditional HPC scenarios is now being performed at the edge, ...