Introducing Accelerated PyTorch Training on Mac

In collaboration with the Metal engineering team at Apple, we are excited to announce support for GPU-accelerated PyTorch training on Mac. Until now, PyTorch training on Mac only leveraged the CPU, but with the upcoming PyTorch v1.12 release, developers and researchers can take advantage ...

Apple's M1 Ultra Chip: Everything You Need to Know

Apple in March 2022 introduced its most powerful Apple silicon chip to date, the M1 Ultra, which is designed for and used in the Mac Studio. This...

Steve Jobs’s last gambit: Apple’s M1 Chip

Even as Apple’s final event of 2020 gradually becomes a speck in the rearview mirror, I can’t help continually thinking about the new M1 chip that debuted there. I am, at heart, an optimist ...

A Hardware Chip Aids Tensor Machine Learning Software Applications

Google’s tensor processing unit has the potential to do more in n-multidimensional mathematics.

Docker Inc. Brings Desktop Dev Tools to M1-Based Macs

Docker Desktop for Mac is now generally available for macOS systems based on an M1 system-on-a-chip (SoC).

Introducing M1 Pro and M1 Max: the most powerful chips Apple has ever built

Apple today announced M1 Pro and M1 Max, the next breakthrough chips for the Mac.

Are The Highly-Marketed Deep Learning and Machine Learning Processors Just Simple Matrix-Multiplication Accelerators?

Artificial intelligence (AI) accelerators are computer systems designed to enhance artificial intelligence and machine learning applications, including artificial neural networks ...

SLIDE : In Defense of Smart Algorithms over Hardware Acceleration for Large-Scale Deep Learning Systems

Deep Learning (DL) algorithms are the central focus of modern machine learning systems. As data volumes keep growing, it has become customary to train large neural networks with hundreds of ...

Cybercriminals Unleashing Malware for Apple M1 Chip

Patrick Wardle found that some macOS malware written for the new M1 processor can bypass anti-malware tools.

CPU vs GPU in Machine Learning

Data scientist and analyst Gino Baltazar goes over the differences between CPUs, GPUs, and ASICs, and what to consider when choosing among them.

Easily Deploy Deep Learning Models in Production

Running multiple models on a single GPU will not automatically run them concurrently to maximize GPU utilization. You can download TensorRT Inference Server as a container from NVIDIA NGC ...

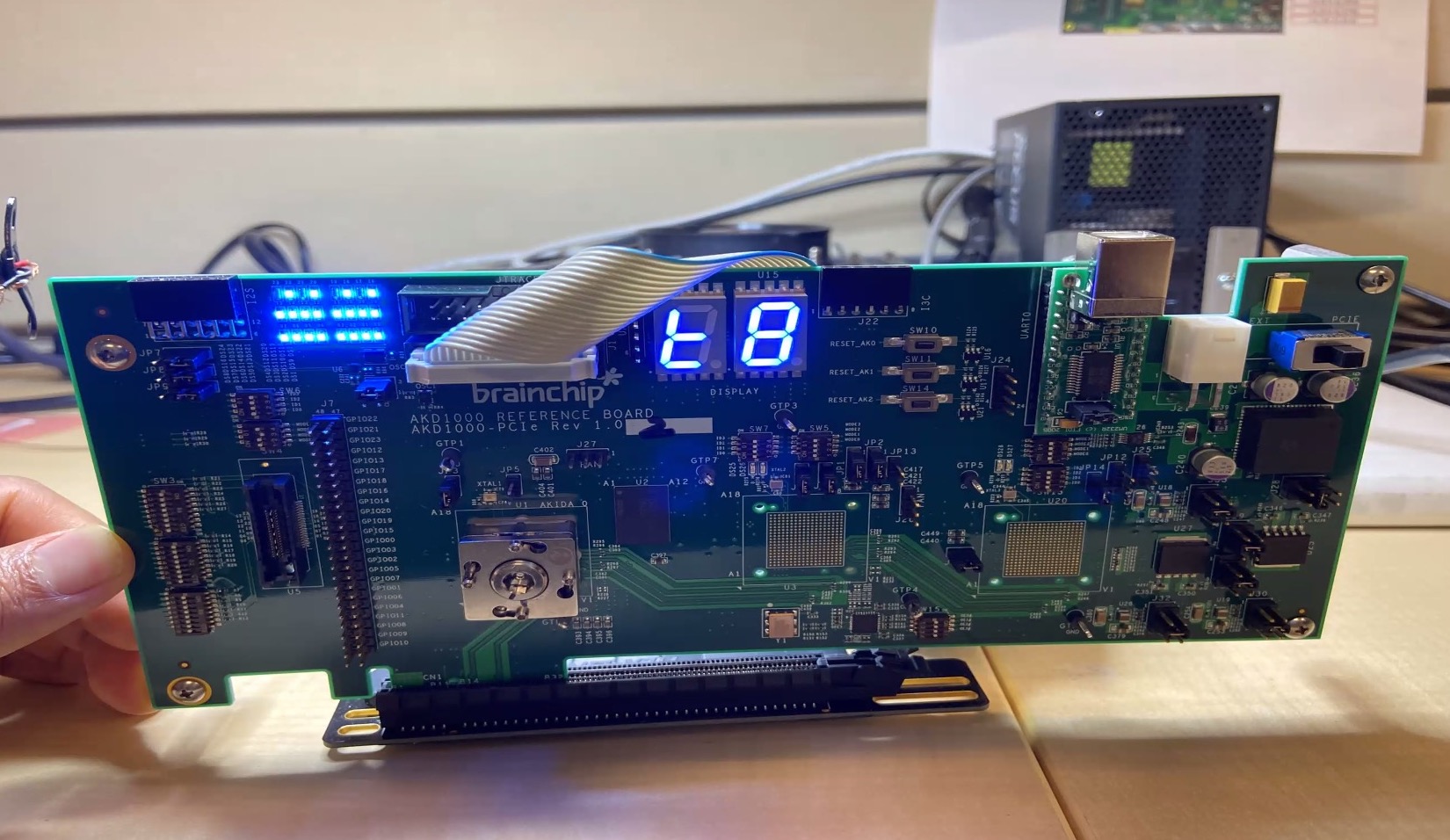

BrainChip Brings AI to the Edge and Beyond

Until now, Artificial Intelligence processing has been a centralized function. It featured massive systems with thousands of processors working in parallel. But researchers have discovered ...

Apple's follow-up to M1 chip goes into mass production for Mac

TSMC-made chipsets to replace Intel offerings in laptops set to launch in 2H

Modern Robotics Compute Architectures – Flexibility, Optimization is Key

Robot behaviors are often built as computational graphs, with data flowing from sensors to compute technologies, all the way down to actuators and back. To obtain additional performance, ...

Fusion on Apple Silicon: Progress Update

It’s been a few months since our informal announcement via Twitter back in November where we committed to delivering VMware VMs on Apple silicon devices, so we wanted to take this ...

Apple Wants To Do It All (And Screw All Its Vendors)

Ahead of its 2019 Worldwide Developer Conference (rumored to be June 3-8th), Apple may finally be moving toward a unified platform strategy between iOS and macOS. Is it time for an ...

Hot Chips Going Full Stream With ML Content

Hot Chips 2020, which starts next Sunday, won't be held at Stanford University. Although it is going virtual, there’s no letup in content. Here's the skinny on what you might expect from many of the leading ...

Artificial Intelligence Uses a Computer Chip Designed for Video Games. Does That Matter?

Nearly all AI systems run on GPU chips that were designed for video gaming. What consequences does this have for the IT sector?