Autoregressive long-context music generation with Perceiver AR

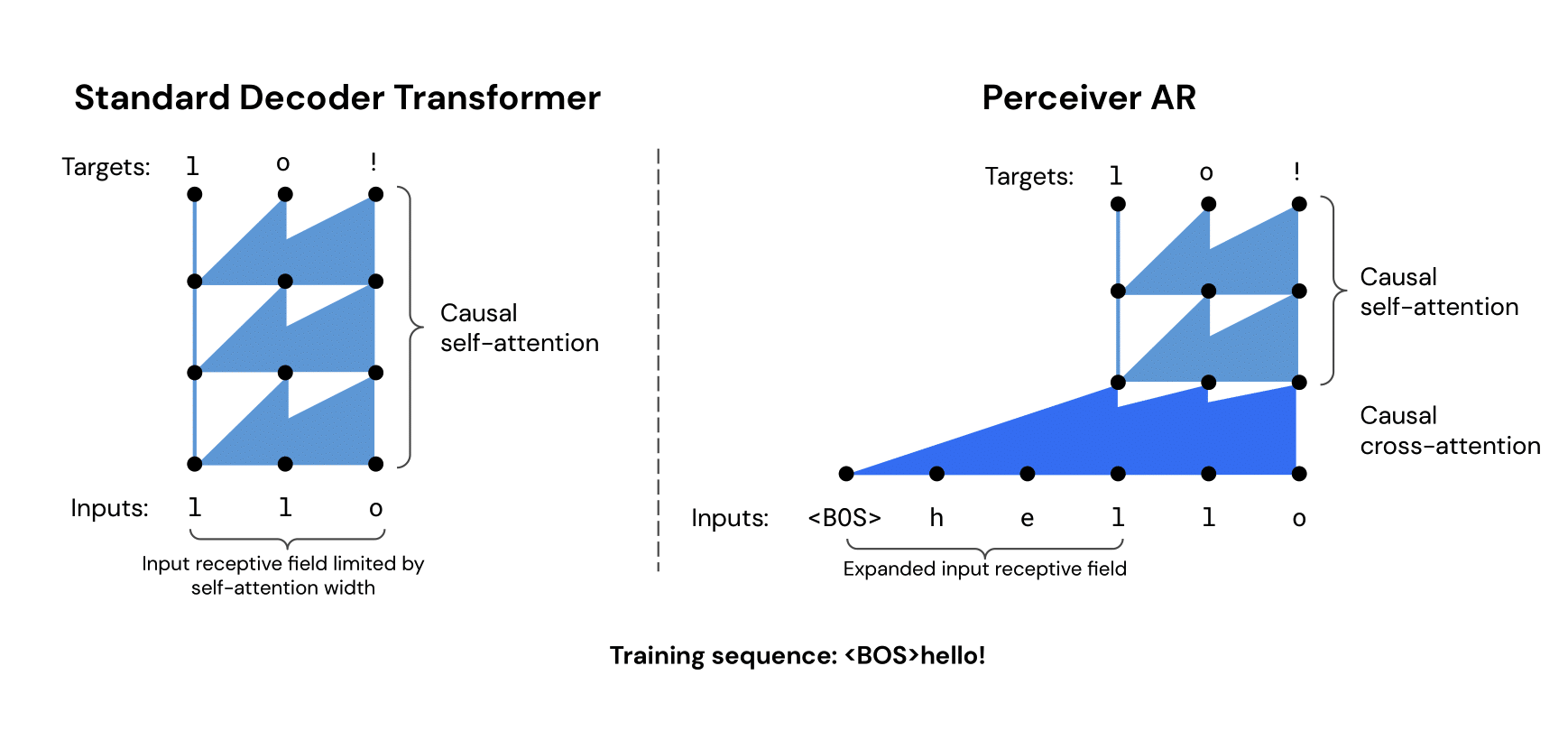

We present our work on music generation with Perceiver AR, an autoregressive architecture that is able to generate high-quality samples as long as 65k tokens...

We are sorry, we could not find the related article

If you are curious about Artificial Intelligence and Applied Use Cases

Please click on:

Subscribe to Artificial Intelligence - Applied Use Cases

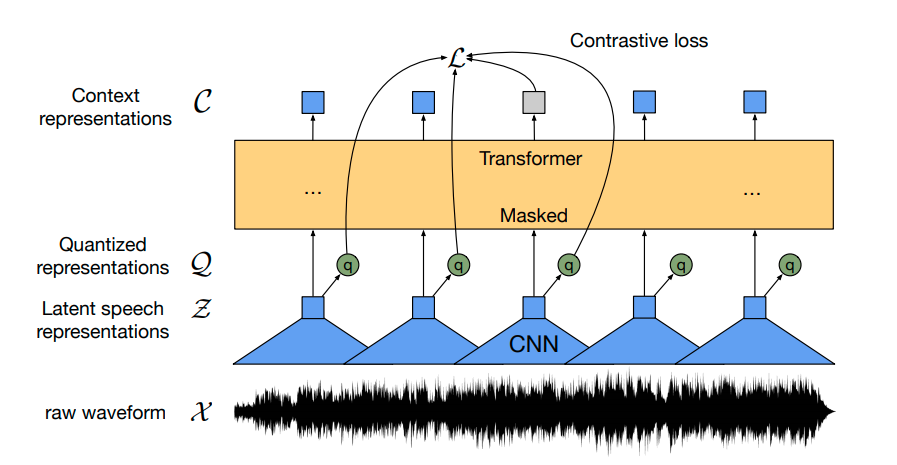

Generating music in the waveform domain

This is a write-up of a presentation on generating music in the waveform domain, which was part of a tutorial that I co-presented at ISMIR 2019 earlier this month.

Glow: Better Reversible Generative Models

We introduce Glow, a reversible generative model which uses invertible 1x1 convolutions. It extends previous [https://arxiv.org/abs/1410.8516] work [https://arxiv.org/abs/1605.08803] on ...

Deep Learning For Symbolic Mathematics

Neural networks have a reputation for being better at solving statistical or approximate problems than at performing calculations or working with symbolic data. In this paper, we show that ...

Marantz Announces New Amplifier With Network Player. Just Add Speakers!

The new Marantz MODEL 40n is an integrated stereo amplifier with streaming capabilities and a beautiful new design.

Click here to read the article

Whether evaluating arithmetic or Boolean circuits, the approach is the same: in an offline pre-computation phase, the participants generate correlated randomness (or receive it from a ...

guillefix / transflower-lightning

multimodal transformer. Contribute to guillefix/transflower-lightning development by creating an account on GitHub.

Fine-Tune Wav2Vec2 for English ASR with 🤗 Transformers

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

WaveFlow: A Compact Flow-Based Model for Raw Audio

In recent years, deep neural networks have achieved notable success in synthesizing raw audio for high-fidelity speech and music generation. Many researchers from various organizations ...

Imputer: Sequence Modelling via Imputation and Dynamic Programming

This paper presents the Imputer, a neural sequence model that generates output sequences iteratively via imputations. The Imputer is an iterative generative model, requiring only a constant ...

Non_Interactive – Software & ML

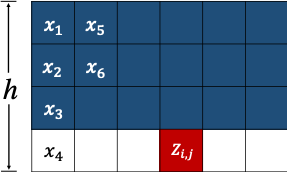

One nifty thing about this set-up is that I can now concatenate the diffusion inputs with the conditioning signal from the discretized audio signal and feed the whole thing into the ...

ECCV 2020: Some Highlights

The 2020 European Conference on Computer Vision took place online, from 23 to 28 August, and consisted of 1360 papers, divided into 104 orals, 160 spotlights and the rest of 1096 papers as ...

Review — Attention Is All You Need (Transformer)

Using Transformer, Attention is Drawn, Long-Range Dependencies are Considered, Outperforms ByteNet, Deep-Att, GNMT, and ConvS2S

Bidirectional Encoder Representations from Transformers

Following NeurIPS19, here are the trends Graphcore are seeing in AI research – and what this means for how we process machine learning models in future.

Learning Distributed Word Representations with Neural Network: an implementation from scratch in Octave

This article describes the problem of learning word representations with a neural network from scratch. This problem appeared as an assignment…

Keras RNN API

model.summary() Model: "sequential" _________________________________________________________________ Layer (type) Output Shape Param # ...