CUDA, Nvidia, GPGPU, Scalable Link Interface, ATI Technologies, Stream processing

RT @adamlikesai: Great work, @vielmetti! Check out @deepwavedigital and their gr-wavelearner OOT module designed to wrap @NVIDIA TensorRT engines for RF neural network inferencing. https://t.co/wDWxiQ0tXm https://t.co/MZid0NkfAV

gr-wavelearner

Perform deep learning inference on signals. Contribute to deepwavedigital/gr-wavelearner development by creating an account on GitHub.

tensorflow_cc

Build and install TensorFlow C++ API library. Contribute to FloopCZ/tensorflow_cc development by creating an account on GitHub.

Nvidia RAPIDS accelerates analytics and machine learning

New open-source libraries from Nvidia provide GPU acceleration of data analytics and machine learning. The company claims 50x speed-ups over CPU-only implementations.

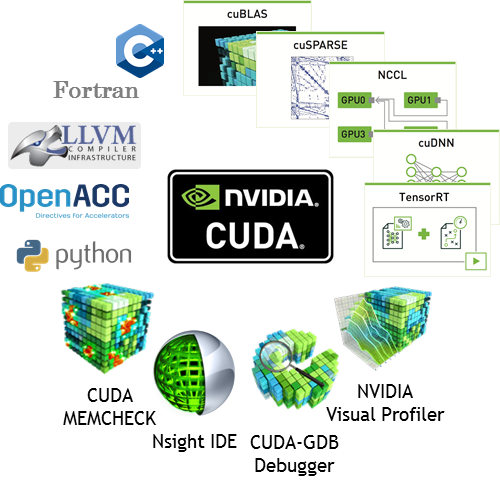

CUDA 10 Features Revealed: Turing, CUDA Graphs and More

CUDA 10 supports the new Turing architecture, including added Tensor Core data types, CUDA graphs, and improved analysis tools.

New NVIDIA Data Center Inference Platform to Fuel Next Wave of AI-Powered Services

GTC Japan -- Fueling the growth of AI services worldwide, NVIDIA today launched an AI data center platform that delivers the industry’s most advanced inference acceleration for voice, ...

First, Google touts $150 AI dev kit. Now, Nvidia's peddling a $99 Nano for GPU ML tinkerers. Do we hear $50? $50?

It only took three fscking hours of keynote to announce it – where's the GPU optimization for that?

What you need to do deep learning

In the last 20 years, the video gaming industry drove huge advances in GPUs (graphics processing units), which perform the matrix math needed to render graphics. The status of ...

TensorFlow, PyTorch or MXNet? A comprehensive evaluation on NLP & CV tasks with Titan RTX

Thanks to the CUDA architecture [1] developed by NVIDIA, developers can exploit GPUs’ parallel computing power to perform general computation without extra effort. Our objective is to ...